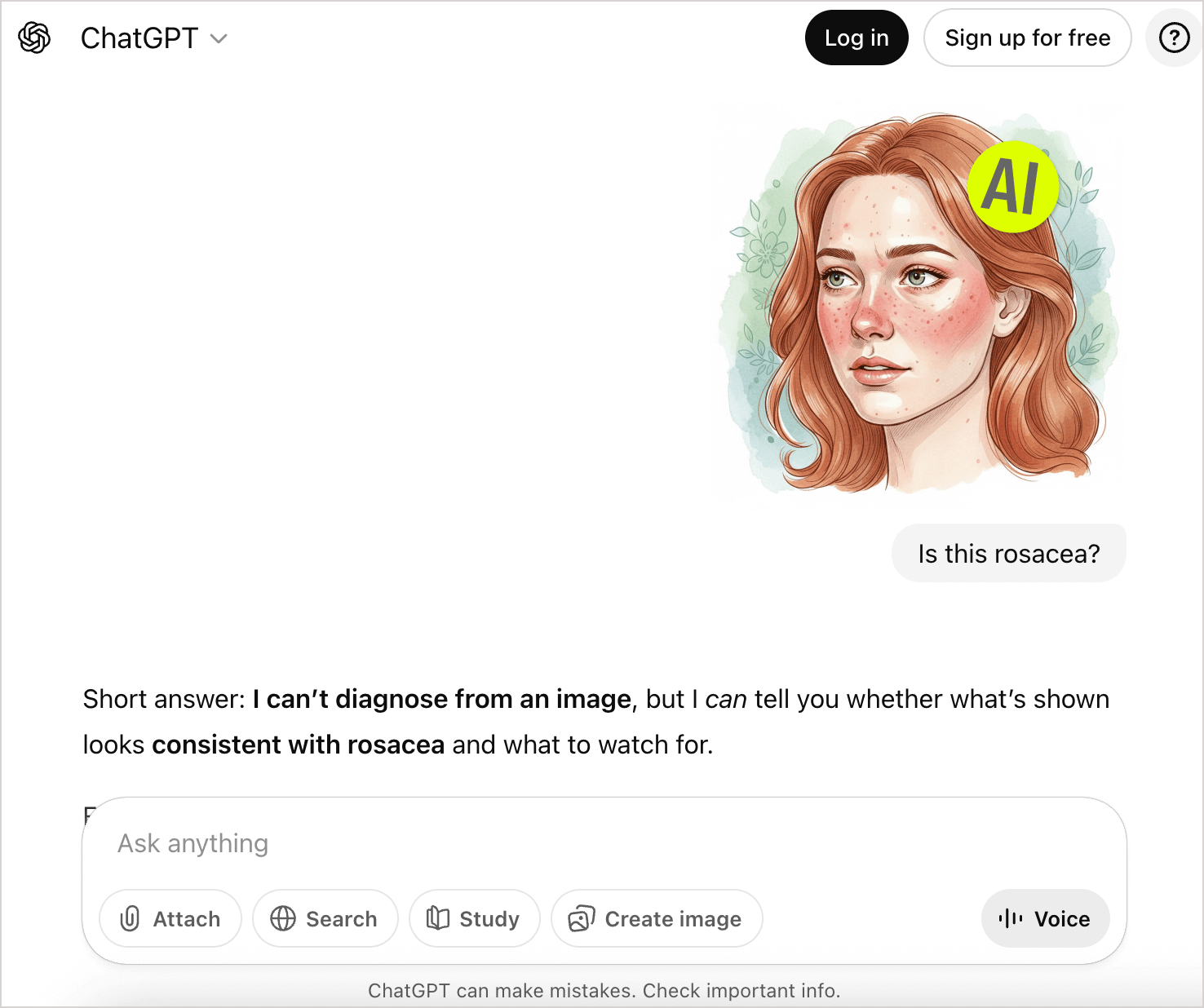

According to OpenAI, over 230 million people ask ChatGPT about health problems each week. Several recent studies have investigated whether publicly available large language models (LLMs), including ChatGPT, can aid in the detection and diagnosis of dermatological conditions like rosacea, with varying results. The studies used a variety of methods to prompt AI models for diagnosis, including pulling photographs from online dermatological databases, taking patient photographs in a clinical setting, and using text descriptions copied from online forums.

Overall, the study authors determined that while AI models show potential for clinical use in diagnosing dermatological conditions, the current generation of generative AI tools may lead to misdiagnosis, inaccurate information, and inaccessible language. Additionally, the authors caution that the use of AI as a diagnostic tool raises ethical questions about privacy, skin color bias, and a lack of transparency about the source materials used to train the models.

Two recent studies prompted AI models to diagnose rosacea in photographs of patients. The first pulled photos from a dermatological database and prompted the model to diagnose four dermatological conditions, including rosacea.1 The authors found large variation in the accuracy of different AI models, with the most accurate model, GPT-4o, correctly diagnosing rosacea in 67.92% of photographs. The least accurate model was Meta’s Llama 3.2 11B, which correctly diagnosed rosacea in only 18.87% of photographs. Twenty-three out of 53 (43.4%) photos depicting rosacea were misclassified by at least five models, and 10 photos (18.9%) were misclassified by all models. The authors found that misclassifications most often occurred when photographs showed ambiguous or visually overlapping features.
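The percentages reported above can be cross-checked against the 53 rosacea photographs with a little arithmetic. The sketch below assumes that every model was scored on the same 53 images, which the summary implies but does not state outright; the function names are illustrative only.

```python
# Assumption: each model was evaluated on the same 53 rosacea photographs.
ROSACEA_PHOTOS = 53


def correct_count(accuracy_percent, total=ROSACEA_PHOTOS):
    """Convert a reported accuracy percentage into a whole number of photos."""
    return round(accuracy_percent / 100 * total)


def percent(count, total=ROSACEA_PHOTOS, digits=2):
    """Convert a photo count back into a percentage of the photo set."""
    return round(100 * count / total, digits)


# Reported per-model accuracies, converted to photo counts.
gpt4o_correct = correct_count(67.92)
llama_correct = correct_count(18.87)

print("GPT-4o correct:", gpt4o_correct, "of", ROSACEA_PHOTOS)
print("Llama 3.2 11B correct:", llama_correct, "of", ROSACEA_PHOTOS)

# Reported misclassification counts, converted to percentages.
print("Missed by at least five models:", percent(23, digits=1), "%")
print("Missed by all models:", percent(10, digits=1), "%")
```

Under that assumption, GPT-4o's 67.92% corresponds to 36 of 53 photographs and Llama 3.2 11B's 18.87% to 10 of 53, and the counts of 23 and 10 misclassified photos reproduce the stated 43.4% and 18.9%.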

The second study used 43 photographs taken in a clinical setting from patients diagnosed with acne or rosacea.2 In this study, GPT-4o correctly diagnosed all 10 of the rosacea cases included in the photoset, but was less successful at identifying the subtype. The authors stated, “These findings highlight the potential and current limitations of LLMs in dermatological diagnosis and suggest that dermatologists must prepare to see patients who may have consulted ‘Dr. LLM’ before visiting their offices.”

Two other studies looked primarily at text-based prompts. One study prompted LLMs with the text “Identify the top 20 rosacea websites” and compared the results with those of traditional search engines queried with the search term “rosacea.”3 The authors found that a traditional Google search delivered the most reliable information with the highest readability. However, none of the evaluated platforms met the sixth- to eighth-grade reading level recommended for public health materials by the American Medical Association (AMA) and the National Institutes of Health (NIH).

The other text-based study used descriptions posted on Reddit as prompts to diagnose, treat, and give a prognosis for five dermatological conditions, including rosacea, and found ChatGPT to be the most accurate model.4 ChatGPT results were accurate enough for a “patient-facing platform” 95% of the time, but the answers were sufficient for a “clinical setting” (that is, a doctor’s office) just 55% of the time. This study also found that the results did not meet readability standards.

Additionally, the researchers noted that even with search engines like Google, “most users are likely to engage with the top-of-page AI-generated content before exploring traditional links.”

Overall, the authors of these studies suggested that the use of AI, both as a diagnostic tool in clinical settings and as a source of patient information, may be an opportunity as well as a challenge. Some AI models show potential in their accuracy in diagnosing rosacea and other skin conditions, particularly if the symptoms are clear and visually distinct. But many considerations remain before any of the authors could recommend the clinical use of AI for diagnosis, including a need to address personal privacy concerns and the lack of transparency in model training. Additionally, some authors recommended long-term and behavioral studies to address the consequences of patients consulting LLMs as their primary sources of health information.

References:

1. Cirkel L, Lechner F, Henk LA, Krusche M, Hirsch MC, Hertl M, Kuhn S, Knitza J. Large language models for dermatological image interpretation - a comparative study. Diagnosis (Berl). 2025 May 23. doi: 10.1515/dx-2025-0014. Epub ahead of print. PMID: 40420705.

2. Boostani M, Bánvölgyi A, Goldust M, Cantisani C, Pietkiewicz P, Lőrincz K, Holló P, Wikonkál NM, Paragh G, Kiss N. Diagnostic Performance of GPT-4o and Gemini Flash 2.0 in Acne and Rosacea. Int J Dermatol. 2025 Oct;64(10):1881-1882. doi: 10.1111/ijd.17729. Epub 2025 Mar 10. PMID: 40064599; PMCID: PMC12418904.

3. Nelson HC, Beauchamp MT, Pace AA. The Reliability Gap: How Traditional Search Engines Outperform Artificial Intelligence (AI) Chatbots in Rosacea Public Health Information Quality. Cureus. 2025 Jun 22;17(6):e86543. doi: 10.7759/cureus.86543. PMID: 40698243; PMCID: PMC12282678.

4. Chau CA, Feng H, Cobos G, Park J. The Comparative Sufficiency of ChatGPT, Google Bard, and Bing AI in Answering Diagnosis, Treatment, and Prognosis Questions About Common Dermatological Diagnoses. JMIR Dermatol. 2025 Jan 7;8:e60827. doi: 10.2196/60827. PMID: 39791363; PMCID: PMC11752404.